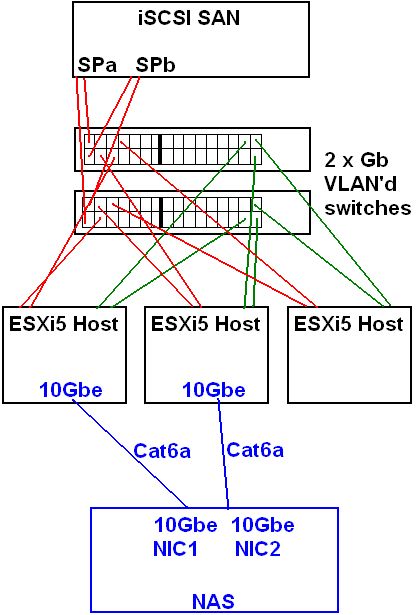

We have a 3-node VMware cluster backed by an iSCSI SAN and would like to supplement the SAN with some extra NAS storage.

The NAS storage should run over 10GbE to provide added performance too.

However, 10GbE switches are still beyond our budget, and directly connecting at least two of the virtual hosts to the NAS over 10GbE is considerably cheaper.

This works quite well until you consider failover/vMotion. Each virtual host sees the NAS target at a different IP address (because each connects to a separate NIC port on the NAS), so you cannot automatically fail over NAS-hosted VMs to another host.

Also, when you attempt to vMotion guest VMs stored on the NAS to another host, the same datastore is not available, because the same NAS target IP is not reachable from the second host.
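To make the problem concrete, here is a toy sketch in Python. It assumes the NAS is mounted NFS-style, so a datastore is identified by the pair (target IP, export path); the host names, IP addresses and export path are all made up for illustration and are not from our real environment.

```python
from typing import Dict, Tuple

# (NAS target IP, export path) as seen by one host. All values are hypothetical.
Mount = Tuple[str, str]

# Each host reaches the NAS over its own direct 10GbE link, so the target IP differs.
direct_attached: Dict[str, Mount] = {
    "host1": ("10.0.1.10", "/vol/vmstore"),
    "host2": ("10.0.2.10", "/vol/vmstore"),
}

def shared_datastore(mounts: Dict[str, Mount]) -> bool:
    """Return True only if every host mounts the identical (IP, path) pair."""
    return len(set(mounts.values())) == 1

if __name__ == "__main__":
    # The export is the same, but the target IPs differ, so the hosts
    # do not agree on a single datastore identity.
    print(shared_datastore(direct_attached))
```

If both hosts could reach the NAS on one and the same target IP, the check above would pass, which is what I am hoping to achieve.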

I guess this could be solved by configuring an active-active port trunk on the NAS's dual-port 10GbE NIC, so that both hosts reach the NAS on the same IP address.

Before steaming ahead I'd like to hear if anyone else has done this and how it worked in reality.

The attached sketch shows the environment, with the proposed NAS configuration in blue.