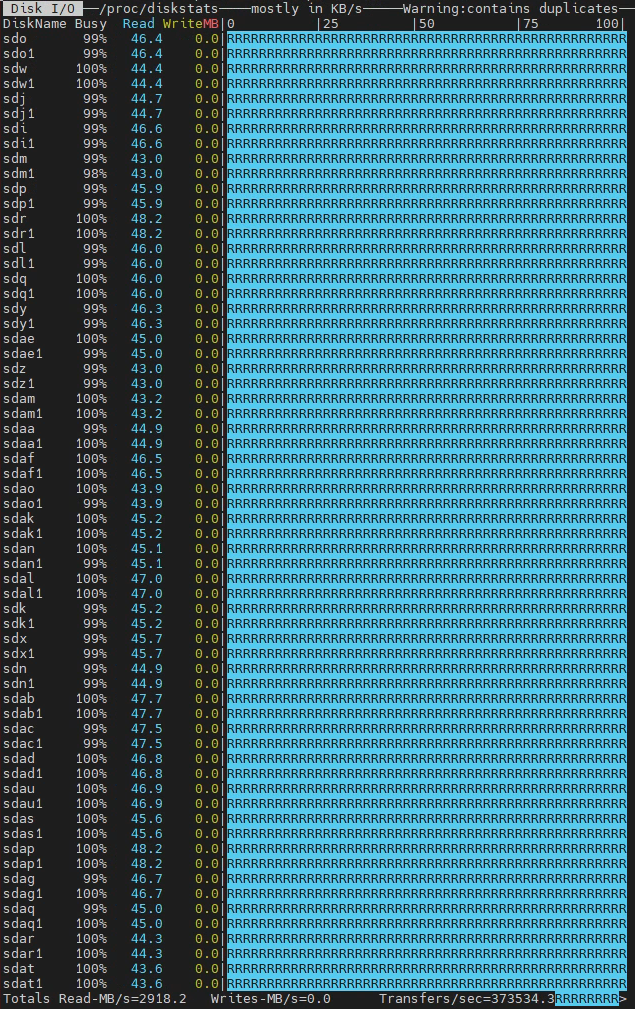

We're in the middle of migrating Oracle from bare metal (ODA) to VMware/NetApp. The NetApp is an all-NVMe SSD SAN array. The ESX servers are Dell R750s with 2 HBAs each. We have carved up 32 "disks" (1TB each) on the NetApp to present to the ESX host, and these are served to the Linux guest as VMDKs (not using RDM). When we run the SLOB benchmark tool, we can see the Linux guest making use of all 32 ASM disks (as it sees them):

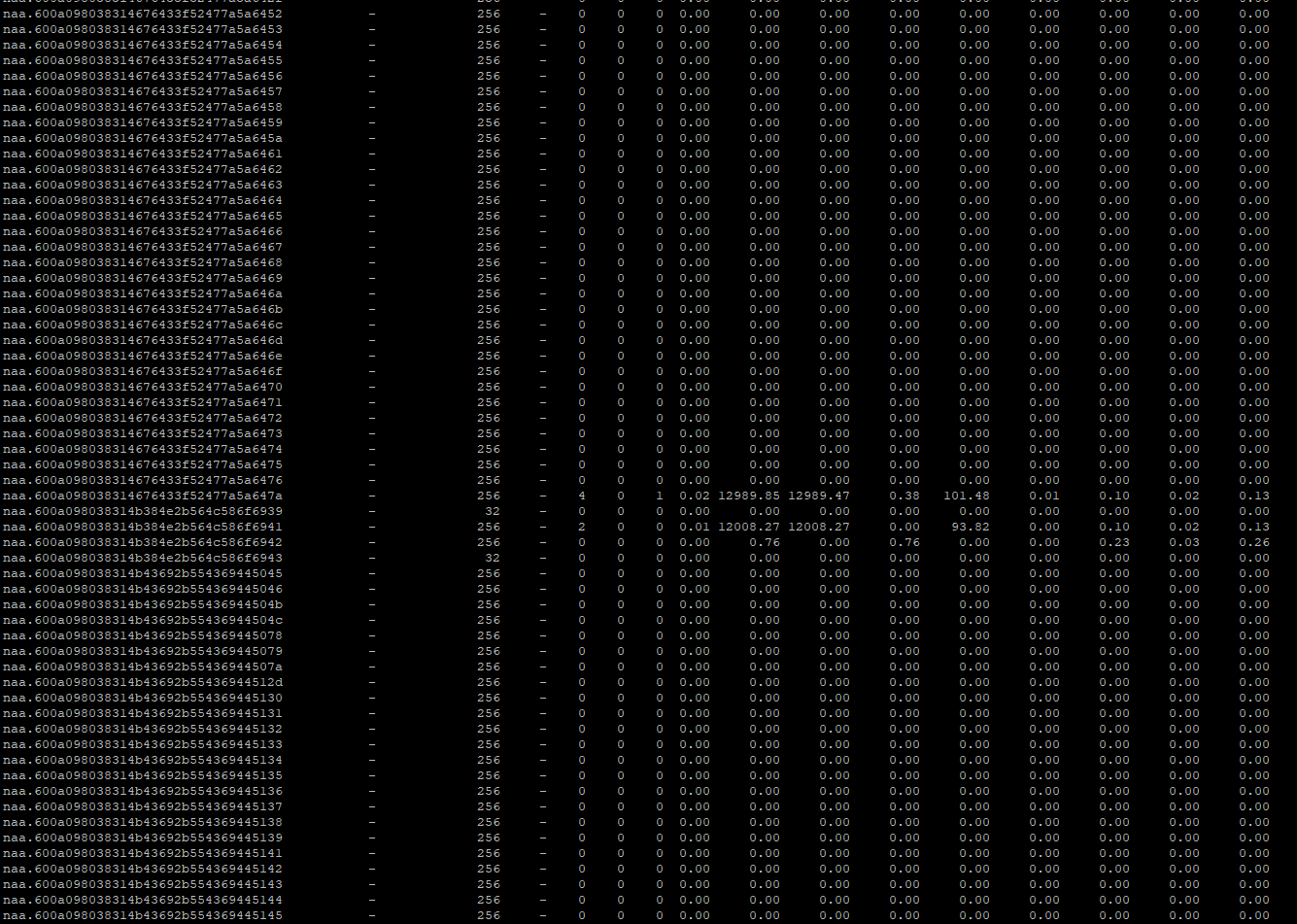

But when the ESX admin looks at the disks at the ESX layer, he is only seeing 2 busy disks:

Is this normal? Do we have a bottleneck because of how the disks are presented to ESX? We're not sure how disks/LUNs from the NetApp should appear on the ESX host.

Thanks

Wayne