By now most of us are familiar with the concept of the Data Lake. EMC’s recent launch of IsilonSD and its DataLake 2.0 strategy gave us a great opportunity to revisit our own needs.

The Data Lake is distinguished from a traditional data store by being a single repository for unstructured data, as opposed to a purpose-built data store with clearly structured metadata, management and prioritization. Lacking this metadata structure and management, the Data Lake seems an unlikely candidate for useful data correlation and intelligence. Enter the magic of large numbers! While a small repository of unstructured files is an exercise in organized chaos, a large pool offers something special. Trends, intelligence and insight emerge from large pools of data, especially when dealing with real-time, organic processes that are constantly adapting. Even in environments where epiphany through analytics is not the expectation and the Data Lake is still simply a landing place for unstructured data such as imagery and design files, the advances driven by Data Lake design are benefiting storage users.

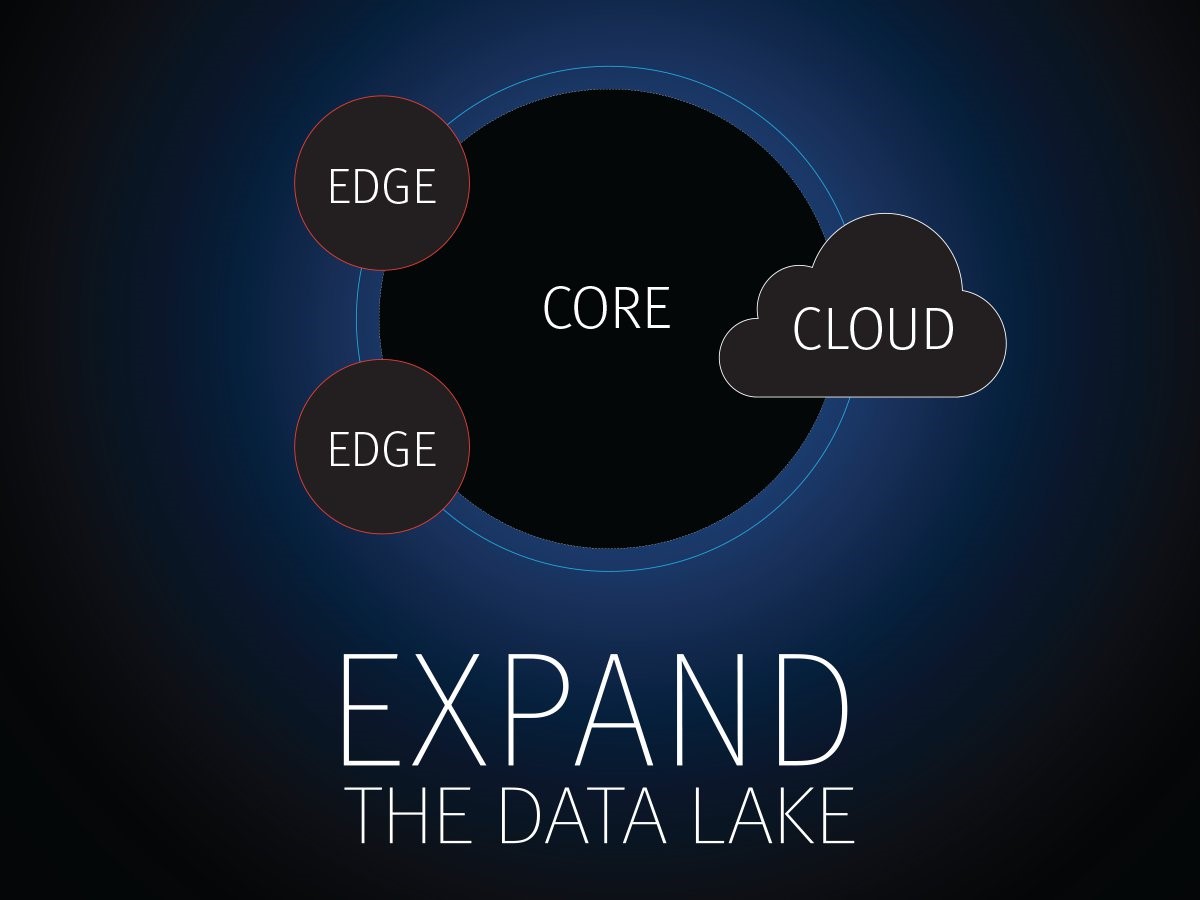

In our own Briefing Center and Floor Exhibit environment we had a clear gap. Our Data Lake needed to pull in as much unstructured data as possible from real-time participants and use cases in order to provide intelligence, and it also needed to run previously prepared demos from the same equipment. Yet a significant number of physical sites in our design fell outside our primary HQ focus, leaving many remote and temporary locations outside our solution.

Essentially, these activities and sources of unstructured data were outside the edge of the Data Lake we had defined. This was a natural outcome of our operational process, but it limited the scope and value of our pool of data. It was not until we started re-analyzing how to better manage these sites that we faced the limitation head on.

Our project started with a need to look at how best to serve remote locations, which could be distant, mobile, and often temporary. As we looked at options it was clear that we needed a software-defined storage (SDS) solution. This was driven by a single key need: an affordable solution that could handle the strain of frequent travel. Classic equipment is intended to be shipped once, maybe a few times. Constant travel and repeated installation called for specially hardened equipment, which came at a significantly higher price. Even then, no show floor armor would have stopped the forklift arm that went straight through one rack, destroying an entire shelf which could not be physically re-created on-site. We needed the environment to be distributed, robust, and in our target price range. Our answer was to look at software-defined storage solutions, with an option to leverage available hardware that met our unique needs.

Unfortunately, the SDS selection process just presented more, but not better, options. However, once we applied a few criteria we quickly came down to a single answer. These remote sites are often not staffed by storage or file system experts, so the addition of the SDS solution could not turn into a tuning, management or operation burden for the users. We needed robust, production-grade tools with as many automation and workflow features as possible built in. Looking at these needs and leveraging our Connectrix VDX based Isilon cluster, the final design selection came down to IsilonSD replicating back to our Isilon cluster running on VDX.

Really getting to our goal, however, would rest entirely on managing the workflows. Breaking down the workflow into simple steps and getting reliable tools to manage the complexity of each phase became the mission. A great benefit of IsilonSD was the ability to use SyncIQ to automatically create a process to replicate and push data to the remote sites based on our unique needs. At the core we could leverage the EMC Connectrix VDX to build a VCS Fabric that can expand and adapt with auto provisioning and load balancing.

The key for the core of the Data Lake was that we needed native automation to ensure minimal overhead. The final layer came through adding in many of the tools associated with the New IP. This included leveraging our Brocade vRouter and its ability to act as a virtual VPN endpoint and firewall. It also included leveraging EMC CloudPools to allow lower priority data to be managed in the cloud.
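To make the data flow concrete, here is a minimal sketch of the placement logic described above: data captured at a remote site is replicated back to the core cluster, and lower priority data is then tiered out to the cloud. It is only an illustration of the decision flow, not SyncIQ or CloudPools configuration. The names `Dataset`, `Priority`, `CORE_SITE` and `plan_placement` are hypothetical, as is the simple two-level priority scheme.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Priority(Enum):
    HIGH = "high"
    LOW = "low"


@dataclass(frozen=True)
class Dataset:
    name: str
    site: str            # site where the data was captured
    priority: Priority


# Hypothetical label for the core cluster at HQ.
CORE_SITE = "hq-core"


def plan_placement(ds: Dataset) -> List[str]:
    """Return the ordered workflow steps for one dataset.

    Assumption: every edge dataset is replicated to the core first,
    and only low priority data is tiered to the cloud afterwards.
    """
    steps: List[str] = []
    if ds.site != CORE_SITE:
        # Edge data is replicated back to the core cluster.
        steps.append(f"replicate {ds.name}: {ds.site} -> {CORE_SITE}")
    if ds.priority is Priority.LOW:
        # Lower priority data moves on to cloud storage.
        steps.append(f"tier {ds.name}: {CORE_SITE} -> cloud")
    return steps


if __name__ == "__main__":
    demo = Dataset("booth-video", "show-floor-1", Priority.LOW)
    for step in plan_placement(demo):
        print(step)
```

In a real deployment these decisions would live in the storage platform's own policy engine rather than in a script. The point of the sketch is only that each dataset needs two answers: where it was captured and how important it is.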

The result was a first step toward an Edgeless Data Lake. By using a software-defined solution to bring in activity at a large number of lower priority sites, using Isilon SyncIQ to drive replication workflows, and using Connectrix VDX to leverage native automation for simplified network workflows, we found the right tools to manage the process. Of course this is just a start. How do we further extend our workflows based on business needs? How do we adapt our analytics as we collect insight? How do we achieve higher levels of business agility? The possibilities are limitless as we take further steps toward the New IP.

For additional information on this design you can reference the video use case with EMC Isilon.

You can find additional information on the New IP architecture here:

New IP Architecture (with link)

#UnstructuredFiles #storage #isilon #NewIP #BrocadeFibreChannelNetworkingCommunity #DataLake #vdx #emc