I am setting up a new vSphere 6 environment with two ESXi servers, and for shared storage I am using a Windows Server 2016 box running StarWind Virtual SAN. Each server has a quad-port 1Gb NIC dedicated to iSCSI traffic, connected to a single Cisco SG300-28 switch. I have created a VLAN for the iSCSI storage traffic and have enabled and configured Round Robin MPIO.
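For context on how Round Robin uses the four bound paths: vSphere's native Round Robin policy (`VMW_PSP_RR`) is IOPS-based by default, sending 1000 I/Os down one path before moving to the next. The sketch below is a simplified model of that behaviour, not VMware's actual code. The path labels and the burst size are hypothetical. It illustrates that with a large limit a short burst of I/Os stays on a single 1Gb path, while a limit of 1 spreads the same burst across all four.

```python
from __future__ import annotations

from collections import Counter


def round_robin_paths(num_ios: int, paths: list[str], iops_limit: int = 1000) -> list[str]:
    """Assign each I/O, in order, to a path, switching to the next path
    after every `iops_limit` I/Os (a simplified IOPS-based round-robin model)."""
    if not paths:
        raise ValueError("at least one path is required")
    if iops_limit < 1:
        raise ValueError("iops_limit must be at least 1")
    # I/O number i belongs to batch i // iops_limit; batches rotate through the paths.
    return [paths[(i // iops_limit) % len(paths)] for i in range(num_ios)]


if __name__ == "__main__":
    # Hypothetical labels for the four port-bound iSCSI paths.
    paths = ["path-1", "path-2", "path-3", "path-4"]
    burst = 8  # a short burst of I/Os (hypothetical size)
    for limit in (1000, 1):
        used = Counter(round_robin_paths(burst, paths, iops_limit=limit))
        print(f"iops_limit={limit}: {dict(used)}")
```

Run as a script, it prints `{'path-1': 8}` for a limit of 1000 and two I/Os per path for a limit of 1.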

The problem is that storage performance is awful. To troubleshoot, I ended up connecting the 4 x 1Gb NICs in the ESXi server directly to the SAN server. Here are the results:

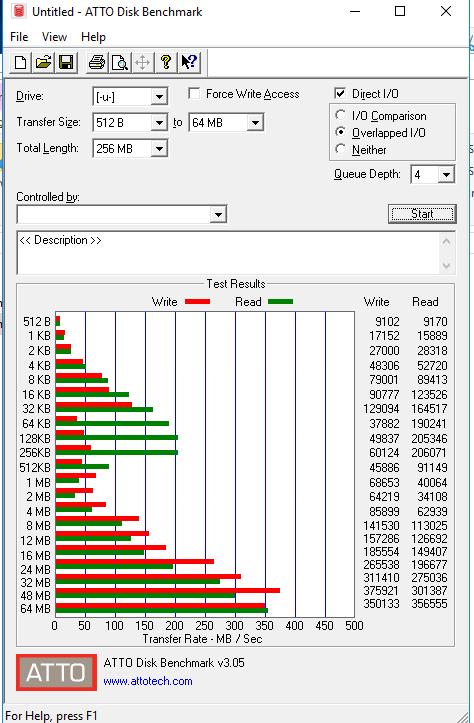

ESXi server connected to Cisco SG300-28 switch:

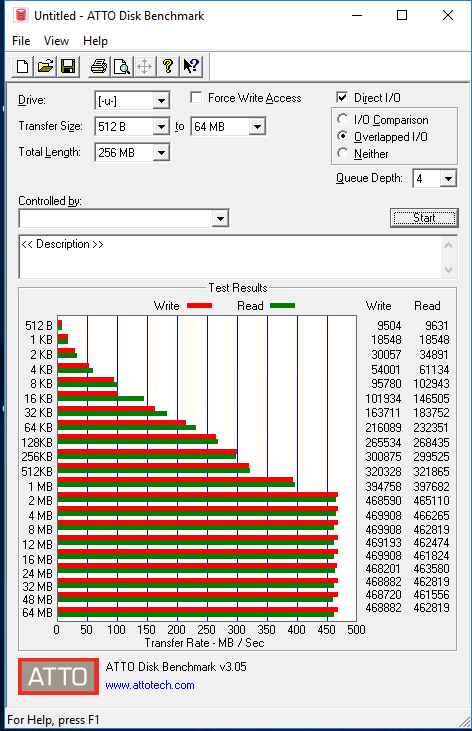

Running cables directly between the ESXi server and SAN:

As you can see, there is a huge difference in performance! With the ESXi host connected directly to the SAN, file copies inside the VM and general copy operations are also fast, but once the host goes through the switch again they drop to well under 80 MB/s.
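Some raw line-rate arithmetic puts the 80 MB/s figure in context. Assuming 1 MB = 10^6 bytes and ignoring Ethernet, TCP and iSCSI overhead, a single 1Gb link carries at most 125 MB/s and four links 500 MB/s. The helper names below are only for illustration.

```python
from __future__ import annotations


def link_mb_per_s(gbit_per_s: float) -> float:
    """Raw line rate in MB/s (1 MB = 10**6 bytes), ignoring all protocol overhead."""
    return gbit_per_s * 1000 / 8


def compare_to_links(observed_mb_s: float, links: int, gbit_per_link: float = 1.0) -> dict[str, float]:
    """Express an observed throughput as a fraction of one link and of all links."""
    one_link = link_mb_per_s(gbit_per_link)
    all_links = one_link * links
    return {
        "one_link_mb_s": one_link,
        "all_links_mb_s": all_links,
        "fraction_of_one_link": observed_mb_s / one_link,
        "fraction_of_all_links": observed_mb_s / all_links,
    }


if __name__ == "__main__":
    # 80 MB/s is the upper bound quoted for the switched setup; 4 x 1Gb iSCSI NICs.
    for key, value in compare_to_links(80, links=4).items():
        print(f"{key}: {value:.2f}")
```

By this arithmetic, 80 MB/s is about 64% of one link and about 16% of the four-link aggregate. So the switched setup is delivering less than even a single 1Gb path could carry.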

Is there any reason for the poor performance with the Cisco SG300-28 switch? I'm not using jumbo frames, but is there anything I need to configure on the switch or in vCenter to get iSCSI performing as it should? I'm using network port binding for iSCSI.
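On the jumbo frame question: jumbo frames mainly reduce per-frame overhead. The rough sketch below estimates TCP payload efficiency for full-size frames. It assumes standard untagged Ethernet framing, 40 bytes of IPv4 and TCP headers without options, and no iSCSI PDU headers. Under those assumptions, MTU 9000 gains only about 4 percentage points over MTU 1500 on a 1Gb link. By that estimate, missing jumbo frames alone would not account for a gap this large.

```python
# Bytes added to every frame on the wire (untagged Ethernet).
PREAMBLE_AND_SFD = 8
ETHERNET_HEADER = 14
FRAME_CHECK_SEQUENCE = 4
INTERFRAME_GAP = 12
# IPv4 + TCP headers without options (an assumption; TCP options add more).
IP_TCP_HEADERS = 40


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of wire time that carries TCP payload for full-size frames."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + PREAMBLE_AND_SFD + ETHERNET_HEADER + FRAME_CHECK_SEQUENCE + INTERFRAME_GAP
    return payload / on_wire


if __name__ == "__main__":
    line_rate_mb_s = 125.0  # raw 1 Gb/s expressed in MB/s
    for mtu in (1500, 9000):
        eff = tcp_payload_efficiency(mtu)
        print(f"MTU {mtu}: {eff:.1%} -> {eff * line_rate_mb_s:.1f} MB/s per link")
```

This gives roughly 94.9% (about 118.7 MB/s) at MTU 1500 and 99.1% (about 123.9 MB/s) at MTU 9000.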

This is a home lab, so there is literally no other load on this switch, and I only have two VMs running at the moment: vCenter and a test Windows VM.

I am totally baffled as to why the iSCSI performance would be so bad when I have read such good things about this switch being used in home labs!