Besides Triton, the NVIDIA TAO Toolkit might be interesting as well. Here is some provisioning information.

Step 1: package installation

Execute the following commands.

tdnf install -y wget unzip python3-pip

pip3 install virtualenvwrapper

export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3

source /usr/bin/virtualenvwrapper.sh

mkvirtualenv -p /usr/bin/python3 launcher

pip3 install jupyterlab

pip3 install nvidia-tao
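The export and source lines only affect the current shell. Here is a minimal sketch, assuming the settings should go into ~/.bash_profile (like the NGC path below) so that after a new login `workon launcher` reactivates the environment. The hypothetical helper append_once adds a line only if the file does not already contain it, so running the provisioning twice does not duplicate entries.

```shell
# append_once FILE LINE
# Hypothetical helper: appends LINE to FILE unless FILE already contains
# exactly this line. FILE is created if it does not exist.
append_once() {
    local file=$1 line=$2
    touch "$file" || return 1
    # -q: quiet, -x: whole-line match, -F: fixed string instead of regex
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# persist_virtualenvwrapper [PROFILE]
# Hypothetical helper: writes the two virtualenvwrapper settings from
# Step 1 into PROFILE (default: ~/.bash_profile).
persist_virtualenvwrapper() {
    local profile=${1:-$HOME/.bash_profile}
    append_once "$profile" 'export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3' &&
        append_once "$profile" 'source /usr/bin/virtualenvwrapper.sh'
}
```

Usage: run `persist_virtualenvwrapper` once after Step 1, then use `workon launcher` in later sessions.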

Install the NVIDIA GPU Cloud (NGC) CLI as well, including the MD5 check of the extracted files.

wget --content-disposition https://ngc.nvidia.com/downloads/ngccli_linux.zip && unzip ngccli_linux.zip && chmod u+x ngc-cli/ngc

find ngc-cli/ -type f -exec md5sum {} + | LC_ALL=C sort | md5sum -c ngc-cli.md5

echo "export PATH=\"\$PATH:$(pwd)/ngc-cli\"" >> ~/.bash_profile && source ~/.bash_profile
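The checksum command is dense, so here is how it works. find hashes every extracted file, LC_ALL=C sort puts these lines into a locale-independent order, and md5sum -c compares the hash of the sorted list with ngc-cli.md5. This relies on the fact that a file name `-` in a checksum file means standard input; we assume ngc-cli.md5 holds one such entry. The sketch below reproduces the scheme for any directory. Both function names are hypothetical.

```shell
# make_dir_checksum DIR OUTFILE
# Hashes every regular file below DIR, sorts the lines bytewise and writes
# the MD5 of that sorted list to OUTFILE as "<hash>  -" ("-" = stdin).
# OUTFILE must lie outside DIR, otherwise it would hash itself.
make_dir_checksum() {
    find "$1" -type f -exec md5sum {} + | LC_ALL=C sort | md5sum > "$2"
}

# verify_dir_checksum DIR CHECKFILE
# Same pipeline as the NGC commands above: exits with 0 only if no file
# below DIR was added, removed or modified since CHECKFILE was written.
verify_dir_checksum() {
    find "$1" -type f -exec md5sum {} + | LC_ALL=C sort | md5sum -c --quiet "$2"
}
```

The paths printed by find are part of the hashed list, so create and verify from the same working directory with the same DIR argument.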

Step 2: download the TAO samples

Now we download the TAO computer vision samples, which build on pretrained ML models.

wget --content-disposition https://api.ngc.nvidia.com/v2/resources/nvidia/tao/cv_samples/versions/v1.2.0/zip -O cv_samples_v1.2.0.zip

unzip -u cv_samples_v1.2.0.zip -d ./cv_samples_v1.2.0

cd ./cv_samples_v1.2.0

mkdir -p ./detectnet_v2/data

For later use, we create two directories as well.

mkdir -p /workspace/tao-experiments/detectnet_v2

mkdir -p /workspace/tao-experiments/data/training

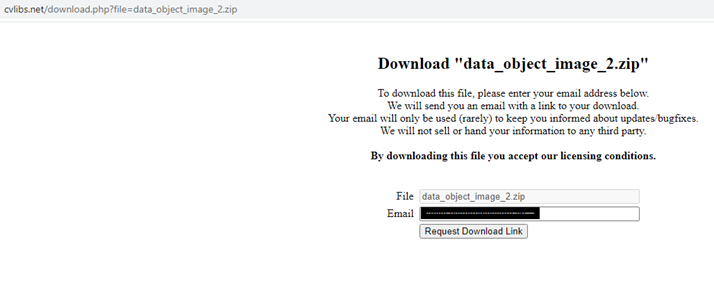
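The directory preparation of this step can be wrapped into one idempotent function. This is a sketch under two assumptions: the archive unpacks without an extra top-level folder, so the notebook ends up at detectnet_v2/detectnet_v2.ipynb, and ROOT is a hypothetical prefix that lets you try the layout in a scratch directory instead of /workspace.

```shell
# prepare_tao_dirs SAMPLES_DIR [ROOT]
# SAMPLES_DIR: the unpacked cv_samples_v1.2.0 directory.
# ROOT: hypothetical prefix for the /workspace tree, empty by default
#       (i.e. the real /workspace); useful for a dry run.
prepare_tao_dirs() {
    local samples=$1
    local root=${2:-}
    # The detectnet_v2 notebook opened in Step 4 should exist after unzip.
    if [ ! -f "$samples/detectnet_v2/detectnet_v2.ipynb" ]; then
        echo "detectnet_v2.ipynb not found below $samples" >&2
        return 1
    fi
    # mkdir -p creates missing parents and succeeds if a directory exists.
    mkdir -p "$samples/detectnet_v2/data" \
             "$root/workspace/tao-experiments/detectnet_v2" \
             "$root/workspace/tao-experiments/data/training"
}
```

Inside the samples directory, `prepare_tao_dirs .` applies it to the real /workspace.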

The TAO samples contain Jupyter notebook files that need object images and label data. Both come from the KITTI object detection benchmark and can be obtained as two zip files:

http://www.cvlibs.net/download.php?file=data_object_image_2.zip

The zip file size is 11.7GB.

http://www.cvlibs.net/download.php?file=data_object_label_2.zip

The zip file size is 5.5MB.

Copy the zip files, e.g. with WinSCP, to the Photon OS VM into the directory ./cv_samples_v1.2.0/detectnet_v2/data.

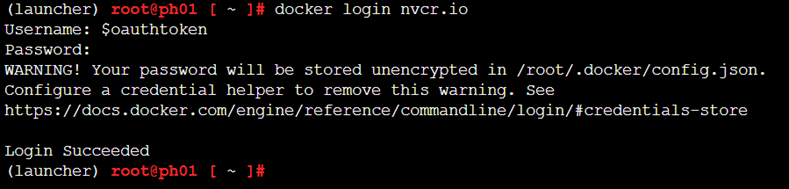
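Before opening the notebook, it is worth checking that both archives arrived. Here is a minimal sketch. check_kitti_zips is a hypothetical name, and it only tests that the files exist and are not empty.

```shell
# check_kitti_zips DATA_DIR
# Succeeds if both KITTI archives exist in DATA_DIR and are not empty;
# otherwise names each missing or empty file on stderr and fails.
check_kitti_zips() {
    local dir=$1 status=0 f
    for f in data_object_image_2.zip data_object_label_2.zip; do
        # -s: file exists and has a size greater than zero
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            status=1
        fi
    done
    return "$status"
}
```

For a stricter check, `unzip -tq data_object_label_2.zip` tests the integrity of an archive. On the 11.7GB image archive this takes a while.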

Step 3: docker login

docker login nvcr.io

If the Docker daemon is not running, start it first with systemctl start docker.

The nvcr.io login (not Docker Hub) is needed later, because the Jupyter notebook runs the TAO containers from that registry.
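For nvcr.io, NGC uses the literal user name `$oauthtoken` and an NGC API key as the password. Here is a minimal sketch, assuming the key is exported as NGC_API_KEY (a variable name chosen here for illustration). The function starts the Docker daemon only if it is not active, then hands the key to docker login via standard input so that the key does not appear on the docker command line.

```shell
# ngc_docker_login
# Assumes the NGC API key is available as NGC_API_KEY (hypothetical name).
ngc_docker_login() {
    if [ -z "${NGC_API_KEY:-}" ]; then
        echo "NGC_API_KEY is not set" >&2
        return 1
    fi
    # Start the daemon only when systemd reports it as not active.
    if ! systemctl is-active --quiet docker; then
        systemctl start docker || return 1
    fi
    # The literal user name $oauthtoken is single-quoted so that the
    # shell does not expand it.
    printf '%s' "$NGC_API_KEY" |
        docker login nvcr.io --username '$oauthtoken' --password-stdin
}
```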

Step 4: Jupyter notebook configuration

The Jupyter notebook server is published on port 8888, so that port has to be opened in the firewall.

iptables -A INPUT -i eth0 -p tcp --dport 8888 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 8888 -j ACCEPT

iptables-save >/etc/systemd/scripts/ip4save
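Running the iptables -A commands a second time appends duplicate rules. Here is a sketch that adds each rule only when iptables -C (check) does not find it. The defaults, port 8888 and interface eth0, come from the commands above and may differ on your VM. The function names are hypothetical. Saving the rules with iptables-save remains a separate step, as shown above.

```shell
# add_rule_once CHAIN RULE...
# Appends RULE to CHAIN unless an identical rule already exists.
# iptables -C exits with 0 if the rule exists, non-zero otherwise.
add_rule_once() {
    local chain=$1
    shift
    if ! iptables -C "$chain" "$@" 2>/dev/null; then
        iptables -A "$chain" "$@"
    fi
}

# open_jupyter_port [PORT] [IFACE]
# Same two rules as above; defaults are port 8888 and interface eth0.
open_jupyter_port() {
    local port=${1:-8888}
    local iface=${2:-eth0}
    add_rule_once INPUT -i "$iface" -p tcp --dport "$port" -j ACCEPT &&
        add_rule_once OUTPUT -p tcp --dport "$port" -j ACCEPT
}
```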

Start the Jupyter notebook server from the samples directory.

cd ./cv_samples_v1.2.0/

/root/.virtualenvs/launcher/bin/jupyter notebook --allow-root --ip 0.0.0.0 --no-browser

The output on the screen looks similar to the following.

[W 2022-08-16 14:57:33.899 LabApp] 'ip' has moved from NotebookApp to ServerApp. This config will be passed to ServerApp. Be sure to update your config before our next release.

[W 2022-08-16 14:57:33.906 LabApp] 'allow_root' has moved from NotebookApp to ServerApp. This config will be passed to ServerApp. Be sure to update your config before our next release.

[W 2022-08-16 14:57:33.911 LabApp] 'allow_root' has moved from NotebookApp to ServerApp. This config will be passed to ServerApp. Be sure to update your config before our next release.

[I 2022-08-16 14:57:33.925 LabApp] JupyterLab extension loaded from /root/.virtualenvs/launcher/lib/python3.10/site-packages/jupyterlab

[I 2022-08-16 14:57:33.929 LabApp] JupyterLab application directory is /root/.virtualenvs/launcher/share/jupyter/lab

[I 14:57:33.937 NotebookApp] Serving notebooks from local directory: /

[I 14:57:33.939 NotebookApp] Jupyter Notebook 6.4.12 is running at:

[I 14:57:33.942 NotebookApp] http://ph01:8888/?token=1471fe6c3147473cbb94f59b8071e1caccf80a655aeca50b

[I 14:57:33.945 NotebookApp] or http://127.0.0.1:8888/?token=1471fe6c3147473cbb94f59b8071e1caccf80a655aeca50b

[I 14:57:33.948 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

To access the notebook, open this file in a browser:

file:///root/.local/share/jupyter/runtime/nbserver-873-open.html

Or copy and paste one of these URLs:

http://ph01:8888/?token=1471fe6c3147473cbb94f59b8071e1caccf80a655aeca50b

or http://127.0.0.1:8888/?token=1471fe6c3147473cbb94f59b8071e1caccf80a655aeca50b

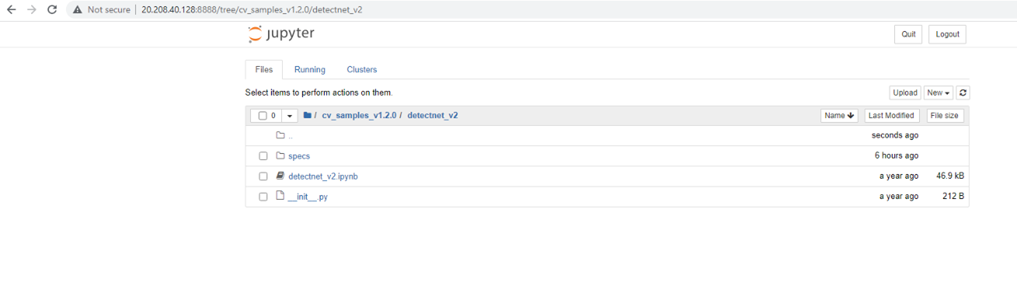

Open a web browser and enter the VM's IP address and the token, for example:

http://20.208.40.128:8888/?token=1471fe6c3147473cbb94f59b8071e1caccf80a655aeca50b
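The URLs in the log contain the host name ph01 or 127.0.0.1, which a browser on another machine may not be able to reach. Here is a sketch that swaps the host part of such a URL for the VM's IP address while keeping the port and token. remote_notebook_url is a hypothetical name. If the log is gone, `jupyter notebook list` prints the URLs of running servers, including the token.

```shell
# remote_notebook_url URL IP
# Replaces the host in a Jupyter URL such as http://ph01:8888/?token=...
# with IP and keeps scheme, port, path and token unchanged.
remote_notebook_url() {
    printf '%s\n' "$1" | sed -E "s#^(https?://)[^:/]+#\\1$2#"
}
```

For example, `remote_notebook_url 'http://ph01:8888/?token=1471fe6c3147473cbb94f59b8071e1caccf80a655aeca50b' 20.208.40.128` prints the browser URL shown above.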

Browse to the directory cv_samples_v1.2.0 > detectnet_v2.

Open the Jupyter notebook file detectnet_v2.ipynb.

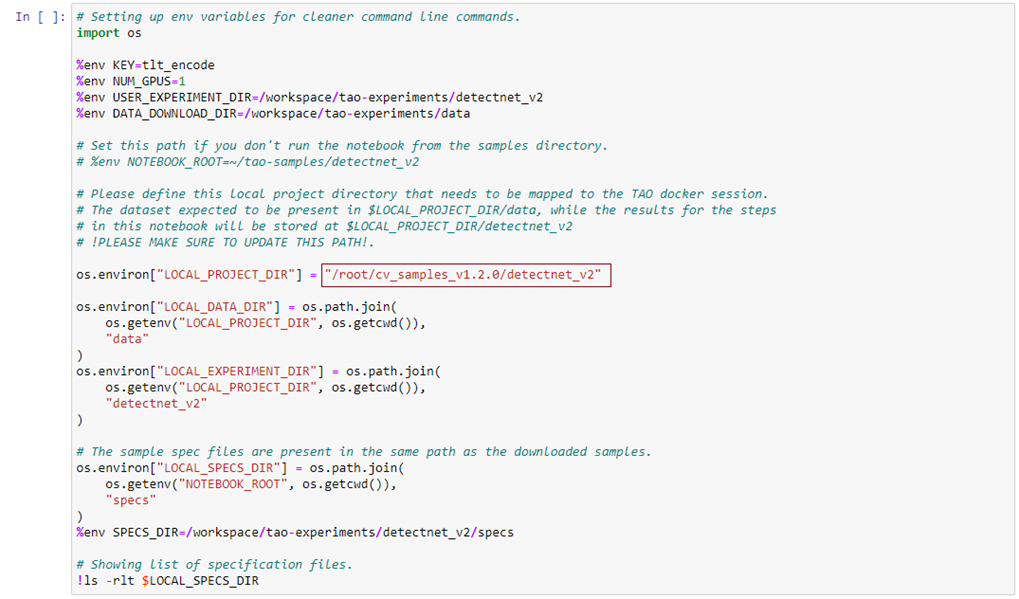

In the section with the environment variables, set the LOCAL_PROJECT_DIR path.

Because no download URLs are set for the images and labels zip files, the notebook reports an error in the download step. The zip files were already copied beforehand, so this error can be ignored. Unpacking the zip files takes a while.

You can now step through the notebook sections and adapt the notebook to your own purposes. With the KITTI data, the notebook reports the following classes and numbers of labeled objects.

b'car': 4129

b'dontcare': 1574

b'truck': 145

b'cyclist': 226

b'misc': 118

b'pedestrian': 638

b'tram': 67

b'van': 377

b'person_sitting': 23
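In the KITTI label format, each line of a label file describes one object, and its first field is the class name. That makes a rough cross-check on the unpacked label files possible. Here is a sketch. count_kitti_classes is a hypothetical name, and the label directory depends on where the notebook unpacks the archive. The KITTI files use capitalized names (Car, DontCare), so the sketch lower-cases them. The notebook's numbers come from its own conversion step, so the totals are not necessarily identical.

```shell
# count_kitti_classes LABEL_DIR
# Counts objects per class over all KITTI label files (*.txt) below
# LABEL_DIR. Each non-empty line is one object and its first field is the
# class name; names are lower-cased to match the notebook output.
# Prints "class count" lines sorted by class name.
count_kitti_classes() {
    find "$1" -type f -name '*.txt' -exec awk 'NF > 0 { print tolower($1) }' {} + |
        LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }'
}
```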

This development setup runs with root privileges and is not intended for use outside a lab environment. So far, all the NVIDIA Triton and TAO material has worked flawlessly on Photon OS.