Model Context Protocol (MCP) has taken the world by storm, and that is understandable. What previously required ad hoc integrations between specific LLMs and data sources can now be replaced with a common protocol that promises a write-once, integrate-with-all-LLMs experience. And while the frameworks and implementations are still immature in cross-cutting concerns like security, authentication or logging, the truth is that MCP opens endless possibilities, especially for agent-to-agent architectures driven by natural language.

As a way to explore MCP, I wanted to show how easy it is to write, in just a few minutes, an MCP server that exposes the information from your Tanzu Application Catalog (TAC) to your AI tool of choice. This gives you an interface to ask questions about your Helm charts and get answers on how to best use and configure them, how to find issues or what changed in the latest releases, to name just a few examples.

A clip is worth a thousand words

Rather than starting directly with the details, let’s see what all the above means: how does it feel to have a personal assistant for your Tanzu Catalog that you can ask any question?

The first thing you'll notice in the above video is that we are using Claude and asking it several questions about Helm charts. To answer those questions, Claude asks permission to use some MCP tools. Those tools are fed with context obtained from the public Bitnami Helm charts and from your Tanzu Application Catalog subscription, and hence can provide much better advice than a general-purpose LLM on its own.

That’s the gist of it. Now that I've got your attention, if you are still interested in learning more about the details or about how to do this yourself, please keep reading.

Setting up the project

All the source code for this blog post is available on GitHub, along with instructions on how to build and run the MCP server. The MCP specification already has several framework implementations, for both clients and servers, in different programming languages. For this blog post we have chosen Spring AI, which extends the MCP Java SDK with Spring Boot integrations that provide both client and server starters.

Rather than turning this into a full blog post about how to use Spring AI to write an MCP server, I think it is much better to link to some of the Spring AI team’s fantastic tutorials, like Dan Vega’s build your own MCP server in 15 minutes.

Spring AI makes the integration incredibly simple, and it can be summarized in a few steps:

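- Import the appropriate Spring Boot starter dependencies into the project.

```xml
<!-- No version: assumed to be managed by the Spring AI BOM (spring-ai-bom) -->
<!-- In Spring AI 1.0 GA this starter is named spring-ai-starter-mcp-server -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-mcp-server-spring-boot-starter</artifactId>
</dependency>
```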

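- Create the MCP application and write a bean that returns the tool definitions.

```java
import java.util.List;

import org.springframework.ai.tool.ToolCallback;
import org.springframework.ai.tool.ToolCallbacks; // Spring AI 1.0 GA: org.springframework.ai.support.ToolCallbacks
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;

@SpringBootApplication
public class McpApplication {

    public static void main(String[] args) {
        SpringApplication.run(McpApplication.class, args);
    }

    // Exposes every @Tool-annotated method of HelmService as an MCP tool
    @Bean
    public List<ToolCallback> bitnamiTools(HelmService helmService) {
        return List.of(ToolCallbacks.from(helmService));
    }
}
```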

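- Implement the logic for the different tools that will be used to return context to the LLMs.

```java
import java.util.List;

import org.springframework.ai.tool.annotation.Tool;
import org.springframework.stereotype.Service;

// Registered as a Spring bean so it can be injected into bitnamiTools(...)
@Service
public class HelmService {

    // In-memory catalog built from the charts-index file (loading code not shown)
    private List<ApplicationMetadata> applications;

    @Tool(name = "get_helm_charts", description = "Gets a list of all the Helm charts available in your Bitnami Premium or Tanzu Application Catalog subscription.")
    List<ApplicationMetadata> getHelmCharts() {
        return applications;
    }

    @Tool(name = "get_helm_chart", description = "Get a single Helm chart as long as it is available in your Bitnami Premium or Tanzu Application Catalog subscription.")
    ApplicationMetadata getHelmChart(String name) {
        // Returns null when the chart is not part of the subscription
        return applications.stream().filter(it -> it.name().equals(name)).findFirst().orElse(null);
    }

    @Tool(name = "get_helm_chart_readme", description = "Returns the README content for any Helm chart from the Bitnami Premium or Tanzu Application Catalog subscription.")
    ApplicationReadme getHelmChartReadme(String name) {
        ApplicationMetadata metadata = getHelmChart(name);
        // readReadme(name) fetches the chart README (implementation not shown)
        return new ApplicationReadme(metadata, readReadme(name));
    }
}
```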

But how does it work?

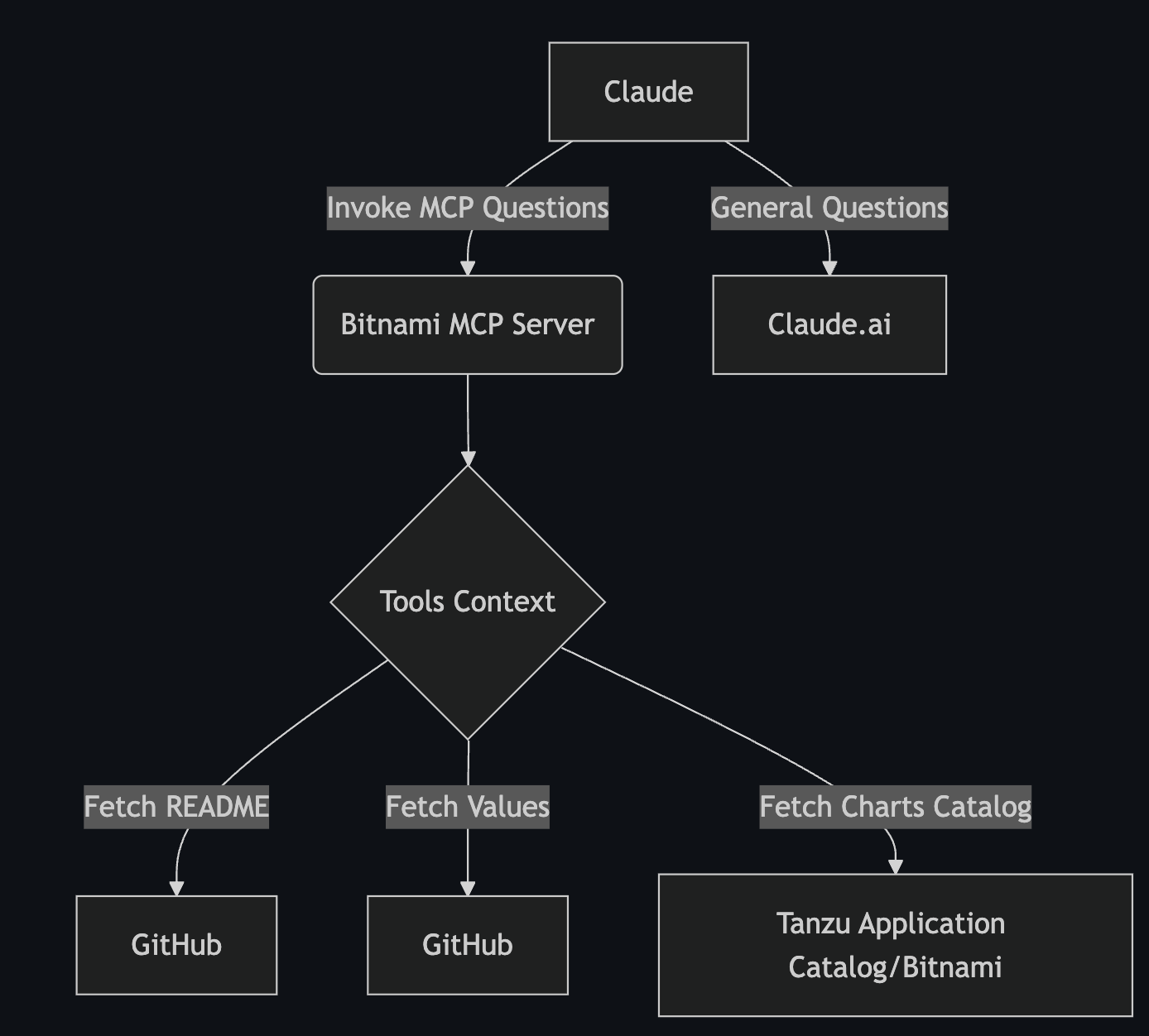

The diagram above shows how our Bitnami Helm MCP server works and, in general, how most MCP servers work.

At the very top sits the LLM; for this demo we are using Claude.ai. The free version of Claude Desktop is enough to handle some of the questions from the demo clip, but it is worth noting that if you want to ask questions that require larger contexts, such as questions about documentation or Helm chart values, you will need Claude Pro, as it supports significantly larger contexts.

In the above diagram, Claude is the MCP client. It could be literally any other AI provider or tool, or just a regular application that uses an MCP client framework like Spring AI. On startup, Claude checks its configuration for MCP servers, connects to each available MCP server and asks for its available tools. Once the tools are registered and initialized, Claude is smart enough to decide whether a user question needs to be answered via a tool or by the remote Claude.ai service.
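To make the discovery step more concrete, here is a minimal sketch of the JSON-RPC 2.0 messages involved, assuming the method names from the MCP specification (initialize, notifications/initialized, tools/list and tools/call). The McpMessages class, the client name and the protocol version string 2024-11-05 are illustrative assumptions; in practice Claude Desktop or Spring AI's MCP client builds and sends these messages for you.

```java
// Hypothetical helper that builds the JSON-RPC 2.0 messages an MCP client sends
// to discover and call a server's tools. Method names follow the MCP specification;
// the protocol version and client info are illustrative assumptions.
// Assumes names and arguments contain nothing that needs extra JSON escaping.
final class McpMessages {

    // Assumed protocol revision; client and server negotiate it during initialize
    static final String PROTOCOL_VERSION = "2024-11-05";

    private McpMessages() {}

    // 1. The client opens the session and announces its capabilities
    static String initializeRequest(int id, String clientName, String clientVersion) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"initialize\",\"params\":{"
                + "\"protocolVersion\":\"" + PROTOCOL_VERSION + "\","
                + "\"capabilities\":{},"
                + "\"clientInfo\":{\"name\":\"" + clientName + "\",\"version\":\"" + clientVersion + "\"}}}";
    }

    // 2. After the server answers, the client confirms initialization (notifications have no id)
    static String initializedNotification() {
        return "{\"jsonrpc\":\"2.0\",\"method\":\"notifications/initialized\"}";
    }

    // 3. The client asks which tools the server exposes (e.g. get_helm_charts)
    static String toolsListRequest(int id) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id + ",\"method\":\"tools/list\"}";
    }

    // 4. When the LLM decides a tool is needed, the client invokes it by name with JSON arguments
    static String toolsCallRequest(int id, String toolName, String argumentsJson) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\",\"params\":{\"name\":\"" + toolName
                + "\",\"arguments\":" + argumentsJson + "}}";
    }

    public static void main(String[] args) {
        System.out.println(initializeRequest(1, "example-client", "0.1.0"));
        System.out.println(initializedNotification());
        System.out.println(toolsListRequest(2));
        System.out.println(toolsCallRequest(3, "get_helm_chart", "{\"name\":\"nginx\"}"));
    }
}
```

Over the stdio transport, each of these messages travels as a single line on the server's standard input, and the server writes its responses as single lines on its standard output. The tools/list response is what lets the client learn about get_helm_charts, get_helm_chart and get_helm_chart_readme together with their descriptions.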

Fetching Helm charts information

At the bottom of the diagram sits our MCP server, which provides information about the Helm charts. That information ranges from the list of all available Helm charts to details about a specific chart, such as its metadata, documentation or default values. To fetch specific files, we can take advantage of the fact that they are publicly available on GitHub, which keeps the demo simple.
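A minimal sketch of how those per-chart files could be fetched from the public bitnami/charts GitHub repository using only the JDK's java.net.http client. The raw.githubusercontent.com URL layout (main branch, bitnami/chart-name/README.md and values.yaml) is an assumption based on the public repository's current structure, and the BitnamiChartFiles class is hypothetical, not necessarily how the demo project implements readReadme.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical fetcher for chart files published in the public bitnami/charts repository
final class BitnamiChartFiles {

    // Assumed base URL: raw files from the main branch of github.com/bitnami/charts
    static final String RAW_BASE = "https://raw.githubusercontent.com/bitnami/charts/main/bitnami/";

    private final HttpClient http = HttpClient.newHttpClient();

    // Builds the raw URL for a file inside a chart directory, e.g. nginx + README.md.
    // The strict name check keeps an unexpected tool argument from becoming an arbitrary path.
    static URI chartFileUri(String chartName, String fileName) {
        if (!chartName.matches("[a-z0-9-]+")) {
            throw new IllegalArgumentException("Unexpected chart name: " + chartName);
        }
        return URI.create(RAW_BASE + chartName + "/" + fileName);
    }

    // Downloads the file as text; a non-200 status usually means the chart is not in the public repo
    String fetch(String chartName, String fileName) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(chartFileUri(chartName, fileName)).GET().build();
        HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("GET " + request.uri() + " returned " + response.statusCode());
        }
        return response.body();
    }

    String readReadme(String chartName) throws IOException, InterruptedException {
        return fetch(chartName, "README.md");
    }

    // Default values, useful for questions about how to configure a chart
    String readValues(String chartName) throws IOException, InterruptedException {
        return fetch(chartName, "values.yaml");
    }
}
```

Keeping this lookup separate from the catalog means the tools can combine subscription-specific metadata with documentation that is the same for everyone.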

On the other hand, to show the user the available Helm charts, we need to tailor the request to each specific catalog. That’s where we take advantage of the charts-index file. This file is a little hidden gem inside the TAC OCI registries. It works much like a Helm repository index, but TAC ships an index (or several, depending on the operating systems you chose) with each catalog. There is an offline example in the GitHub project.

Chart indexes are used to consume catalog information independently of the TAC UI. This is particularly useful in disconnected environments where all you have access to is the OCI registry itself. The charts index is also the foundational tool typically used to synchronize your TAC catalog to air-gapped or highly restricted corporate environments.

In this particular demo, our MCP server reads some environment variables that define how to authenticate to the OCI registry, fetches the charts-index JSON file from the registry and builds an internal representation of the catalog. All of that happens without access to TAC or the TAC UI, which I believe is pretty neat, as it is completely decoupled from the TAC experience.
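The registry side can be sketched in a similar way. This hypothetical RegistryConfig record reads the registry host, username and password from environment variables (the names TAC_REGISTRY, TAC_USERNAME and TAC_PASSWORD are assumptions, not necessarily the ones the project uses) and builds a manifest request using the OCI Distribution API path /v2/name/manifests/reference. The repository and tag holding the charts-index are left as parameters because they depend on your catalog, and storing the index as a regular OCI artifact is itself an assumption. Basic authentication keeps the sketch short; many registries instead reply with a WWW-Authenticate challenge and expect a bearer token from their token endpoint.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.Map;

// Hypothetical registry settings read from environment variables
record RegistryConfig(String registry, String username, String password) {

    // Assumed variable names; adapt them to whatever your MCP server expects
    static RegistryConfig fromEnv(Map<String, String> env) {
        return new RegistryConfig(
                require(env, "TAC_REGISTRY"),
                require(env, "TAC_USERNAME"),
                require(env, "TAC_PASSWORD"));
    }

    private static String require(Map<String, String> env, String key) {
        String value = env.get(key);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Missing environment variable " + key);
        }
        return value;
    }

    // HTTP Basic credentials: "Basic " + base64("username:password")
    String basicAuthHeader() {
        String token = username + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(token.getBytes(StandardCharsets.UTF_8));
    }

    // OCI Distribution API: GET /v2/<name>/manifests/<reference>
    URI manifestUri(String repository, String reference) {
        return URI.create("https://" + registry + "/v2/" + repository + "/manifests/" + reference);
    }

    // Manifest request; assuming the index is a regular OCI artifact, its content would then be
    // downloaded from /v2/<name>/blobs/<digest> using a digest listed in that manifest
    HttpRequest manifestRequest(String repository, String reference) {
        return HttpRequest.newBuilder(manifestUri(repository, reference))
                .header("Authorization", basicAuthHeader())
                .header("Accept", "application/vnd.oci.image.manifest.v1+json")
                .GET()
                .build();
    }
}
```

Calling RegistryConfig.fromEnv(System.getenv()) reads the real process environment, while tests can pass a plain Map.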

Configuring the MCP Client (Claude Desktop)

To configure the MCP client, we need to tell it where and how to run the MCP server. This is largely OS-specific and described in the project itself, but essentially we end up using MCP’s stdio (standard input and output) transport and running the MCP server directly. We could have run the MCP server remotely and used HTTP with Server-Sent Events (SSE), but stdio makes things simpler.

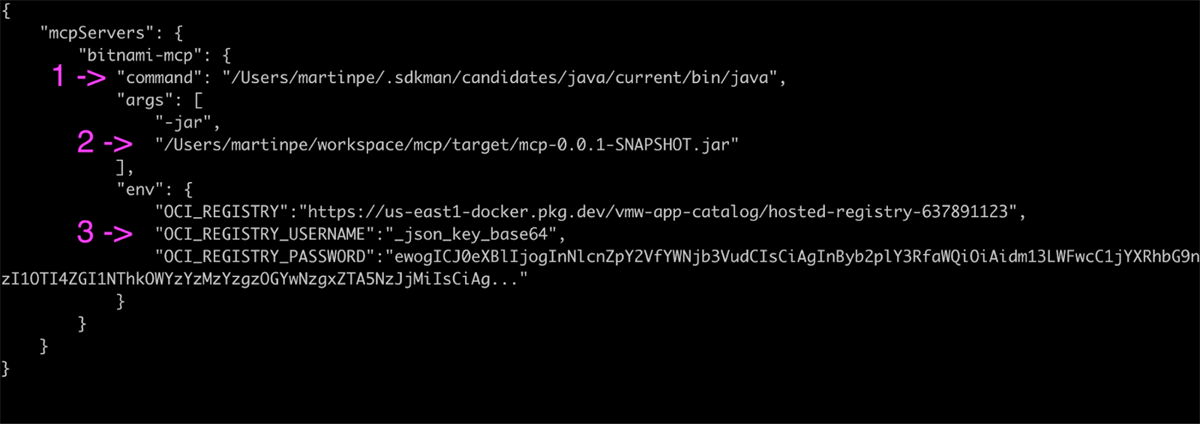

If you try to run this experiment yourself, your Claude configuration will look something like the one below.

In it, you provide:

- the absolute path to your Java development kit,
- the absolute path to the MCP server jar file, and
- the set of environment variables that define your OCI registry, username and password.
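Conceptually, the client does little more than start a process from those three pieces of information and talk to it over its standard input and output. The following hypothetical StdioMcpLauncher reproduces that with the JDK's ProcessBuilder; the environment variable names and values are placeholders, and the single initialize and tools/list exchange is a simplification of what a real client does.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.Map;

// Hypothetical launcher mimicking what an MCP client does with a stdio server entry:
// run <java> -jar <server jar> with extra environment variables, then exchange JSON lines.
final class StdioMcpLauncher {

    static ProcessBuilder processBuilder(String javaPath, String jarPath, Map<String, String> env) {
        ProcessBuilder pb = new ProcessBuilder(List.of(javaPath, "-jar", jarPath));
        pb.environment().putAll(env);                      // registry, username, password
        pb.redirectError(ProcessBuilder.Redirect.INHERIT); // stderr logs stay out of the protocol stream
        return pb;
    }

    // stdio transport: one JSON-RPC message per line, newline-terminated and flushed immediately
    static void send(BufferedWriter in, String json) throws IOException {
        in.write(json);
        in.write("\n");
        in.flush();
    }

    public static void main(String[] args) throws IOException {
        // args[0]: absolute path to java, args[1]: absolute path to the MCP server jar
        Map<String, String> env = Map.of(
                "TAC_REGISTRY", "registry.example.com", // assumed names and placeholder values
                "TAC_USERNAME", "user",
                "TAC_PASSWORD", "secret");
        Process server = processBuilder(args[0], args[1], env).start();
        try (BufferedWriter in = new BufferedWriter(new OutputStreamWriter(server.getOutputStream(), StandardCharsets.UTF_8));
             BufferedReader out = new BufferedReader(new InputStreamReader(server.getInputStream(), StandardCharsets.UTF_8))) {
            send(in, "{\"jsonrpc\":\"2.0\",\"id\":1,\"method\":\"initialize\",\"params\":{\"protocolVersion\":\"2024-11-05\",\"capabilities\":{},\"clientInfo\":{\"name\":\"sketch\",\"version\":\"0.1\"}}}");
            System.out.println(out.readLine()); // expected: the initialize result
            send(in, "{\"jsonrpc\":\"2.0\",\"method\":\"notifications/initialized\"}");
            send(in, "{\"jsonrpc\":\"2.0\",\"id\":2,\"method\":\"tools/list\"}");
            System.out.println(out.readLine()); // expected: the tools/list result
        } finally {
            server.destroy();
        }
    }
}
```

With Spring AI's stdio transport, the server should not write anything else to standard output (for example the Spring Boot banner or console logging), because every line on that stream is parsed as a protocol message.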

The environment variables tell the MCP server how to fetch your TAC catalog information directly from the registry. If you own your TAC registry, you probably already know the credentials. If TAC hosts an OCI registry for you, the TAC documentation has a section on how to obtain credentials.

Those are the credentials you should use, but beware that you will have to base64-encode the file downloaded from the TAC UI and use _json_key_base64 as the username.
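For the hosted-registry case, here is a minimal sketch of turning the key file downloaded from the TAC UI into that username and password pair: the password is the base64-encoded file content and the username is the literal _json_key_base64. The Credentials record and the command-line argument are hypothetical; java.util.Base64.getEncoder() uses the standard alphabet and inserts no line breaks.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

// Hypothetical holder for the registry credentials passed to the MCP server
record Credentials(String username, String password) {

    // Username to use when the password is the base64-encoded key file
    static final String BASE64_KEY_USERNAME = "_json_key_base64";

    // Encodes the raw bytes of the downloaded key file (standard alphabet, no line breaks)
    static Credentials fromKeyFileBytes(byte[] keyFile) {
        return new Credentials(BASE64_KEY_USERNAME, Base64.getEncoder().encodeToString(keyFile));
    }

    static Credentials fromKeyFile(Path keyFile) throws IOException {
        return fromKeyFileBytes(Files.readAllBytes(keyFile));
    }

    public static void main(String[] args) throws IOException {
        // args[0]: path to the key file downloaded from the TAC UI
        Credentials credentials = fromKeyFile(Path.of(args[0]));
        // Values to copy into the username and password variables of the client configuration
        System.out.println("username: " + credentials.username());
        System.out.println("password: " + credentials.password());
    }
}
```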

What more could we do?

With all that context, you should be able to replicate the demo shown at the very beginning of this post. There are also a number of ways the demo could be improved, for example:

- Consume the whole Helm template and use it to get additional context and suggestions based on the template code.
- Integrate Bitnami’s GitHub issues, pull requests or changelog information to get better troubleshooting instructions, solution advice, recommendations or feedback on changes across versions.
- Implement support for running actual Helm commands, as the Kubernetes MCP server does.
- Integrate with TAC’s knowledge graph to ask questions about package composition, CVE remediation and troubleshooting, SBOMs and so on.

If you are interested in any of these topics, all the demo code is open source, so feel free to fork it and play with it. Finally, if you have any comments or questions, feel free to reach out through the usual channels, such as X (formerly Twitter), Bluesky or LinkedIn.

#MCP #AI #bitnami #Kubernetes