Don't we all appreciate simple yet powerful software? So why do we find so little of it? We all appreciate how convenient Uber is: a simple tap and a cab arrives wherever you are. It takes massive intelligence and smart defaults behind the scenes to make that happen.

But for one Uber, how many poorly designed applications must we tolerate?

Yet the recipe for creating delightful software exists and has been documented for almost 20 years. If you want to learn more, I encourage you to read The Inmates Are Running the Asylum by Alan Cooper.

So for CA APM, the challenge at hand is no less than to create an Uber-like experience to manage a full-blown APM environment.

We know the problem, so it would seem that we just have to follow the design process properly and do our homework to create this inspiring software product.

Can we succeed?

The team

The team in charge of the APM Command Center is composed of:

Our UX designer champion: Peter. Peter is responsible for most of the UX design decisions, and also created all of the UI prototypes.

Myself: Product Manager/Owner. I also acted as the SME (subject matter expert) to help Peter get a deeper understanding of customer environments, and I made the final call on design choices.

Our Engineering Manager/Scrum Master, Steve (Steve needs to create a public LinkedIn profile and add a pic, but he's kinda shy :)). Steve was responsible for helping us determine what we could implement and what was too expensive, and for making sure we were not only building the right product, but also building the product right (subject for another blog entry, Steve?).

Our Scrum team, whose skills range from UI/HTML/client-side JavaScript/AngularJS specialists to backend/system gurus.

The Design framework

We use Cooper's "Goal-Directed Design" process. Here's a quick crash course for those new to it:

1) Interview a bunch of people who:

- Use your product

- You think use your product

- You think should want to use your product

Then try to understand what they are currently doing, why they are doing it, how they do it today, and what they would really want to be doing instead.

2) Synthesize the data gathered during these interviews, find the common patterns, and model your different user types as fictional users called Personas. Make sure you understand what their specific goals are.

3) Write the Scenarios your personas will follow to achieve their goals.

4) Flesh out the screens described in the Scenarios, in raw form at first (for example, as Wireframes).

5) Get feedback

6) Iterate on your wireframes.

7) Get feedback

8) Once your raw design is satisfactory, you can create high-fidelity screen representations. (Note: you can jump directly from step 4 to here if creating high-fidelity screens is cheap for your organization. We happen to use InVision to share and collaborate on our designs, and it is absolutely fantastic.)

9) Get feedback

10) Once satisfied, hand off the spec to Engineering!

That's pretty much the theory. Let's see what happened in real life when we created the APM Command Center...

Persona

Like every (hopefully good) story, ours starts with a main character: Kyle Thomas, the APM Administrator at TicketsNow, a leading provider of concert ticket processing for small to medium concert venues throughout the US and Canada.

Consumers can purchase tickets through both the TicketsNow website and its telephone call center. TicketsNow also provides promotion for bands and venues, as well as onsite electronic ticket validation and processing.

In such a fast-paced online environment, updates to the TicketsNow applications are extremely frequent, and so are the fires that IT has to fight.

TicketsNow wants to improve its IT infrastructure but has a difficult time seeing how to move forward. It seems like they’re always fighting the next fire.

The IT department is understaffed and relies on its development teams to help provide rotating production coverage.

Kyle is the sole APM administrator for the production environment. As the APM specialist, he’s often called in to install monitoring after an application is deployed to production, or to assist in diagnosing production problems: “I get so many requests for help with monitoring, and it feels like I have to roll a new solution for each team. I can’t keep up.”

Kyle controls most of the APM lifecycle: instrumentation, dashboards, threshold setting, and alerts. Application developers for each app are on call to solve production issues that ops can’t solve.

Kyle would like to:

+ Enable others to take advantage of APM technology

+ Stop answering the same questions over and over

+ Automate more of the monitoring process

+ Get developers to pay attention to monitoring before their applications reach production

+ Offload simple monitoring configuration tasks to the app teams

What he needs:

+ Understand who is using the monitoring system, and what changes they’ve made

+ Give people a playground to work in without disturbing other groups or the overall operation of the monitoring system

+ Make the process of standing up monitoring simpler, so he can respond to more teams’ requests

His pain points:

+ Watching the performance of the monitoring system to make sure that the instrumentation levels won’t crash it

+ Putting all the instrumentation in place, because regular expression patterns are too difficult for the teams to learn

+ Manually configuring the monitoring servers consistently, when the data is practically the same for each

+ All of their “best practices” are based on their own trial and error

Okay Kyle, that's great: we know who you are and what your goals and needs are. So what's the next thing we should do for you?

This is where the design process sometimes falls short. You can design a cathedral in Wireframes that would take 5 years to build, but that won't magically tell you which functionality matters most, or which one to develop first when you have a release due in 6 months. For that, we had to:

1) Create a list of all the things we want to implement

2) Validate the priorities with real users

3) Map these priorities with the size of the work being requested

4) Decide on the scope of the first release
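Steps 3 and 4 boil down to weighing each item's validated priority against its estimated size. As a purely illustrative sketch (not the process we actually ran), here is one simple heuristic in Python: rank items by priority-to-size ratio and fill a fixed release capacity greedily. The `Item` fields, the scoring, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """A candidate feature (hypothetical fields, for illustration only)."""
    name: str
    priority: int  # value as validated with real users; higher matters more
    size: int      # estimated effort, e.g. in story points (must be > 0)


def pick_release_scope(items: list[Item], capacity: int) -> list[Item]:
    """Greedy value/effort heuristic: consider items with the best
    priority-to-size ratio first, and keep each one that still fits."""
    ranked = sorted(items, key=lambda i: i.priority / i.size, reverse=True)
    scope, used = [], 0
    for item in ranked:
        if used + item.size <= capacity:
            scope.append(item)
            used += item.size
    return scope


# Made-up backlog and capacity, only to show the mechanics.
backlog = [
    Item("Diagnostics report", priority=9, size=5),
    Item("Change Agent log level", priority=7, size=3),
    Item("Custom dashboards", priority=4, size=8),
]
print([i.name for i in pick_release_scope(backlog, capacity=8)])
# → ['Change Agent log level', 'Diagnostics report']
```

A greedy ratio ranking is easy to explain to stakeholders, but it is only a starting point: it can leave capacity unused and it ignores dependencies between items, which you would still need to discuss with Engineering.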

Once we had a scope firmly identified, we were able to start fleshing out scenarios.

Scenarios

You may think we have hundreds of pages describing things in great detail. We don't.

Scenarios are typically quite short and convey only the relevant information.

Here's an example scenario for the first release of APM Command Center:

Kyle is diagnosing a configuration issue

Andrew (the developer Persona working at TicketsNow) calls Kyle and tells him that he installed an APM 9.7 Agent manually. He explains that he migrated an old 9.1 Agent configuration to 9.7 and probably did something wrong, but he can’t figure out what. Andrew asks Kyle for help. Andrew has to go to a meeting now and will be available in a few hours.

Kyle thinks Andrew should have worked with him instead of doing this on his own, and now he has to fix Andrew's errors.

Kyle finds the specific agent, selects it and then chooses to get a Diagnostics report.

Kyle now sees a full report containing details about the Agent environment, including log files and configuration files.

Kyle opens the IntroscopeAgent.profile and sees that spm.pbd has indeed been added to the list. He checks the Autoprobe.log and sees that spm.pbd is indeed being loaded.

Kyle doesn't understand why Web Services are not showing up, so he thinks he needs to open a ticket with CA support.

Kyle sees that he can package the report as an archive file, and thinks it would be very convenient to send CA Support an exact copy of what was displayed on his screen, along with all the files included in the zip.

Kyle opens a ticket with CA Support and attaches the file to the case.
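The check Kyle does by eye in this scenario (is the directive file listed in the profile, and does the log mention it?) could also be scripted. Here is a minimal Python sketch, assuming the profile is a simple key=value file and that directive files are listed in a comma-separated property. We use `introscope.autoprobe.directivesFile` as that property name, but treat it as an assumption and check it against your own agent profile. The function names are hypothetical, and the log check is only a substring search because we don't assume any particular log format.

```python
from typing import Dict

# ASSUMPTION: name of the comma-separated property listing directive files.
# Verify it against your own IntroscopeAgent.profile.
DIRECTIVES_KEY = "introscope.autoprobe.directivesFile"


def parse_profile(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blanks and #/! comments.
    Simplified: ignores the line continuations and escapes that full
    Java .properties files allow."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_directive(profile_text: str, log_text: str, directive: str) -> Dict[str, bool]:
    """Report whether a directive file is listed in the profile and mentioned in the log.
    The log check is a plain substring search: no log format is assumed."""
    listed = [d.strip() for d in parse_profile(profile_text).get(DIRECTIVES_KEY, "").split(",")]
    return {
        "listed_in_profile": directive in listed,
        "mentioned_in_log": directive in log_text,
    }
```

As in the scenario, both checks can pass and the problem can still be elsewhere, which is exactly when a complete diagnostics bundle for support becomes valuable.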

For the first release of APM Command Center, we had only 2 scenarios! The second one covers the ability to change the log level of running Agents.

UI prototypes

Whether you choose to start with low-resolution wireframes or go directly to high-fidelity prototypes, the important part is all the feedback that you get when you start showing these to Stakeholders.

Here is one of the very first screens that we created:

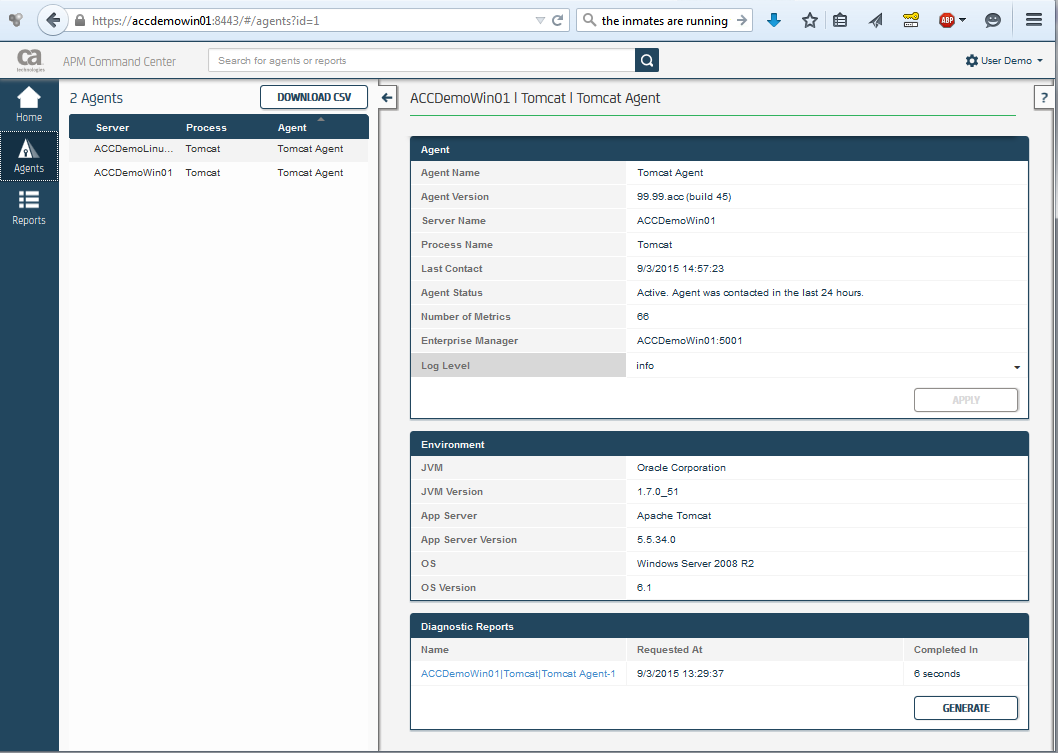

And here is the end result:

Surprisingly similar, huh? It seems easy, but behind these simple screenshots, I'm going to reveal all the excruciating little details that you can't see!

Converting the design into actual software

We use the Agile Scrum development process, so we had to transform the design vision into a prioritized list of user stories that our Engineers could tackle Sprint after Sprint to implement the software.

I love user stories. They're great because they describe the functionality we want to develop and, at the same time, explain why that functionality is valuable to the user. One of their limitations is that they're intentionally short, to encourage discussion, which makes things quite challenging when you're trying to design a pixel-perfect product. Let me give you an example.

Let's say you want to create some user stories to implement the search box. Here's what you need to do:

1) Design the REST API that the UI will call. This product will be beautiful on the outside and on the inside, so the REST API has to be elegant too, not just whatever our programmers can produce the fastest. Then implement it, which will require some user stories too.

2) Start with a simple User Story: "Kyle wants to search for Agents and Reports, so that he can access the information he needs faster". Throw in a link to the design prototype and the Engineers should know what to build, right? Wrong. How are they supposed to know whether to position the search box relatively or absolutely (in pixels) in the header? Should it be aligned with something else? What happens when the user clicks away from the search box? Can the user navigate the search results with the keyboard? (Yes, you can.) Oh, you want an "X" to clear the search? You're going to need a new story for that. Do you want Agent results to appear before Report results? What about your last queries? Oh, you want the lens icon to change when you click on it? When you hover over it too? Does your lens icon have an alt-text attribute for visually impaired users? You want the search box to keep its content when you navigate away? None of these things happen magically; they need to be specified somewhere, either in new user stories, by adding details to the acceptance criteria, or by filing defects.

3) So you made a mistake when you first designed the REST API? Well, no one's to blame; APIs are hard to design (maybe we need a design process for APIs, any takers? :)). OK, we're going to fix it, but the UI will have to be rewired to the new API, and so will the automated tests.

4) Show (and ship) the software to alpha testers and get feedback. Of course, the real software will reveal usability problems that a UI prototype cannot, so you will have to fix these: more stories, more defects, etc.
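To give a feel for how much hides behind "What about your last queries?", here is a small Python sketch of one possible recent-queries store. Every behavior in it is a decision someone has to write down in a story or an acceptance criterion: queries are trimmed, blank ones are ignored, a repeated query moves back to the top instead of appearing twice, and only the newest `capacity` entries are kept. The class name and the default capacity of 10 are hypothetical, not what ACC actually does.

```python
class RecentQueries:
    """Most-recent-first list of search queries (hypothetical sketch)."""

    def __init__(self, capacity: int = 10) -> None:
        self.capacity = capacity
        self._queries: list[str] = []

    def add(self, query: str) -> None:
        q = query.strip()
        if not q:
            return  # ignore blank searches
        if q in self._queries:
            self._queries.remove(q)  # de-duplicate: a repeat moves back to the top
        self._queries.insert(0, q)
        del self._queries[self.capacity:]  # keep only the newest entries

    def items(self) -> list[str]:
        return list(self._queries)  # copy so callers can't mutate internal state


recent = RecentQueries(capacity=3)
for q in ["a", "b", "c", "a", "d", "  "]:
    recent.add(q)
print(recent.items())  # → ['d', 'a', 'c']
```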

This is probably the hardest part. Having a proper design to implement helps tremendously; however, it takes real work and commitment to:

- Stick to the scope. Everyone wants to add features all the time. It's been said before, but the hardest part of being a Product Manager is probably saying "No". Engineers want to add features "because it would give users more control"; users ask for enhancements/changes because of their particular constraints and don't necessarily think about other users; Sales asks for more "****/selling" features that look nice once but never really get used; Consultants ask for "Customizability"; Management asks for corporate "Abilities support". Any time you give in, you usually push back the release date and frequently complicate the product. Keeping the product simple with all those demands is hard work!

- Reject a feature. Sometimes you seem to get new features "for free" because you're using a framework that provides them at almost no development cost. But sometimes the feature just won't solve the problem you're trying to solve, or it complicates the product too much. It's very hard to throw good work in the trash.

- Accept your users' feedback when your original design doesn't work once it's been implemented, and commit to fixing it.

- Be obsessed with details. It takes several pairs of eyes to detect visual anomalies or edge cases that break the flow.

- Iterate, iterate, iterate. You just can't get everything perfect the first time!

What was really hard?

Did you notice:

- That when you use the search box, the URL gets updated accordingly? Same thing when you select an Agent or a Report? Even for downloadable report zips?

- That the search box searches across the whole product (currently Agents and Reports, but more in the future)?

- That the UI is usable both on a 1024-pixel-wide desktop and on one that is 1920 pixels wide or more?

- That when you create a new user, the password is immediately removed from the file and encrypted in the DB?

- How nice the final UI looks? The attention we paid to details: how everything is aligned, the font we use, how visible the information is, and that the main action buttons are displayed in high contrast?

- That the product is fully internationalized (even though not fully localized yet)? That we don't rely on icons alone, for the sake of visually impaired users? That all images have an alt-text?

- How hard we strove to make every line of text simple yet understandable and accurate? That all error messages are associated with a specific code described on the wiki? That for each error we try to give you some potential root causes and things to investigate?

- That we try as much as possible to show you errors directly in the UI so you don't have to open log files to see what's wrong?

- That the column widths and sort order are preserved when you navigate?

- That we store your last search queries for easy reuse?

- That the help menu is contextual and changes depending on what you're looking at?

- How simple the Config Server and Controller installs are? Unzip, run a command, and you're done? That you can run them with non-Admin service accounts?

- That the scripts we use to fetch information on the Controllers get automatically pushed from the server whenever a new version is available?

- That we use an embedded DB for hassle-free install and maintenance? That the data is purged automatically so you don't have to bother?

- That every resource has a direct link that you can copy-paste to send to your coworkers?

- How powerful the search is? The search is at the heart of the product: did you know that we use it internally to find disconnected Agents or Agents sharing the same profile?

- That the UI works perfectly on Chrome, Safari, Firefox, and even Internet Explorer 9 through 11?

- That the product uses a REST API behind the scenes, and that pretty much anything you can do in the UI can be scripted? We're looking into publishing and supporting this API in one of the next releases.

- That the communication channels are all documented, use HTTP, ports can be changed, and support proxies?
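The first item in that list (state reflected in the URL) is what makes every resource linkable. The ACC UI itself runs in the browser, so the following Python is only a sketch of the idea, not our implementation: encode the current search query and selection into URL query parameters, and decode them back when someone opens a shared link. The parameter names `q`, `agent` and `report` and the `acc.example.com` host are hypothetical.

```python
from typing import Dict, Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def state_to_url(base: str, query: Optional[str] = None,
                 agent: Optional[str] = None,
                 report: Optional[str] = None) -> str:
    """Encode the current UI state as URL query parameters (hypothetical names)."""
    pairs = (("q", query), ("agent", agent), ("report", report))
    params = {key: value for key, value in pairs if value}
    return f"{base}?{urlencode(params)}" if params else base


def url_to_state(url: str) -> Dict[str, str]:
    """Decode a shared link back into UI state (first value wins)."""
    parsed = parse_qs(urlsplit(url).query)
    return {key: values[0] for key, values in parsed.items()}


link = state_to_url("https://acc.example.com/search", query="tomcat")
print(link)                # → https://acc.example.com/search?q=tomcat
print(url_to_state(link))  # → {'q': 'tomcat'}
```

Relying on `urlencode` and `parse_qs` rather than hand-built strings takes care of escaping characters such as spaces, `|` or `:` that may appear in Agent names and search queries.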

If you noticed it all, congratulations! You can see the wires behind the magic; you should consider working as a software Designer.

All in all, it's not "hard" per se, but it does require a significant amount of work to make all these small things work great and flawlessly together.

That's it: I've told you most of the magic behind ACC 1.0. What do you think? Do we deliver on our promises, and is the user experience as good as we hope it is?