There are many things to consider when modeling load on a Gateway. Our customers often ask for upfront assistance in determining how many Gateways they need, but this is an ongoing exercise! Customers need a repeatable way to gauge the impact of onboarding a new set of APIs, particularly on shared Gateway environments. One outcome might be to create "fast lane" and "slow lane" sets of Gateways (or dedicated thread pools in shared environments) for APIs with low and high latency, respectively, whether that latency comes from API processing or from Gateway processing (e.g. transformations, encryption, etc.).

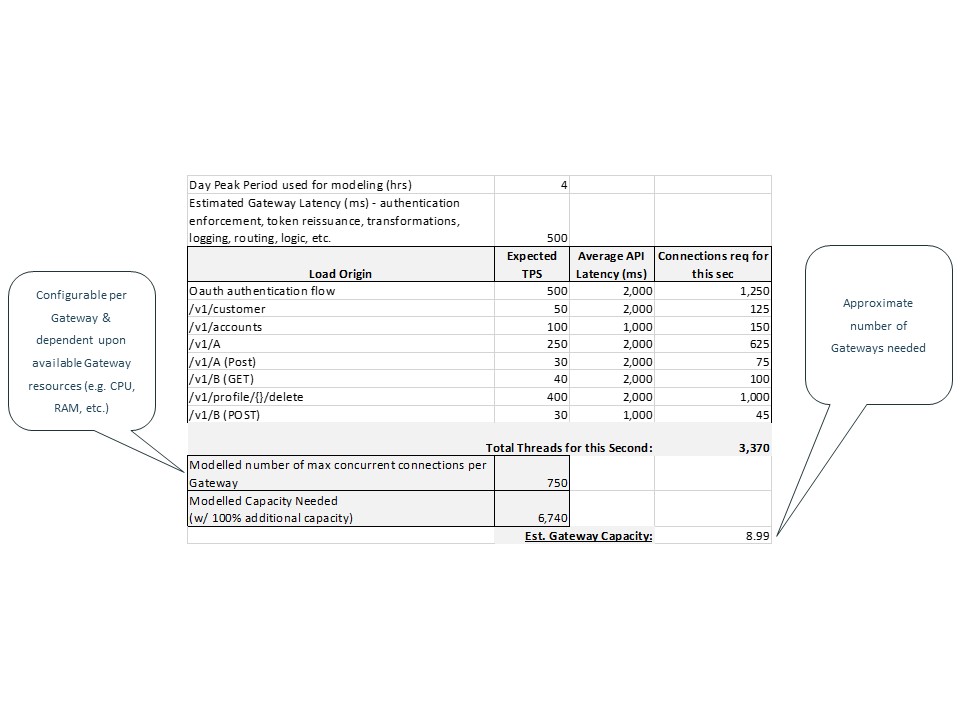

Part of this repeatable process includes profiling APIs during onboarding (potentially the subject of a later blog post). The goal of this discussion, however, is to present an approach for determining impact based on an important "long-pole" factor - Thread Exhaustion.

While there are many other variables in analyzing Gateway performance and capacity, Thread Exhaustion is a leading factor we at CA Services have observed over the years. Additionally, it's possible to include other considerations by factoring them into the above numbers. For example, if one of your endpoints is accessed largely over mobile networks with increased latency, you can increase either the latency for that endpoint or the overall Gateway latency, as the ultimate effect is that the thread cannot be released until the last packet is received from or written back to the client. As another example, if you have a client that sends multiple requests (e.g. HTTP OPTIONS calls) per transaction, you could increase the TPS modeled per endpoint.
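To make the arithmetic concrete, here is a minimal Python sketch of one way to model thread usage. It assumes Little's Law (threads in use ≈ request rate × time each request holds a thread), which may differ from how the tool above calculates it. The `Endpoint` class, the function names, the sample figures, and the pool size of 500 are all hypothetical; substitute your own measurements.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    tps: float                 # peak transactions per second (assumed input)
    backend_latency_s: float   # seconds a thread waits on the backend API
    requests_per_txn: float = 1.0  # e.g. 2.0 if every call is preceded by an OPTIONS request


def threads_in_use(endpoints, gateway_latency_s):
    """Estimate busy threads via Little's Law: rate x time a thread is held."""
    return sum(
        e.tps * e.requests_per_txn * (e.backend_latency_s + gateway_latency_s)
        for e in endpoints
    )


def pool_utilization(endpoints, gateway_latency_s, pool_size):
    """Fraction of the thread pool consumed at the modeled peak."""
    return threads_in_use(endpoints, gateway_latency_s) / pool_size


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    endpoints = [
        Endpoint("orders", tps=100, backend_latency_s=0.2),
        # Mobile-heavy endpoint: higher latency, two requests per transaction.
        Endpoint("mobile-profile", tps=50, backend_latency_s=0.8, requests_per_txn=2.0),
    ]
    busy = threads_in_use(endpoints, gateway_latency_s=0.05)
    share = pool_utilization(endpoints, gateway_latency_s=0.05, pool_size=500)
    print(f"estimated busy threads: {busy:.0f}, pool utilization: {share:.0%}")
```

Raising `backend_latency_s` for a slow mobile endpoint or `requests_per_txn` for a chatty client shows directly how many additional threads that endpoint would tie up, which is the kind of what-if comparison that helps decide whether an API belongs in a "slow lane".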

In conclusion, it's important to establish a repeatable process for gauging the impact of API onboarding on an ongoing basis. The above tool is one example, and a starting point from which your organization can expand according to your own API processing and consumption characteristics.